Cheat sheets

https://cheatography.com/irohitpawar/cheat-sheets/aws-services/pdf/

BurstCreditBalance

If you have a large number of clients, the BurstCreditBalance metric can be used to determine if you are saturating the available bandwidth for the file system.
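One way to read the metric is to look at its trend. The sketch below takes BurstCreditBalance samples (in bytes, oldest first, at a fixed interval) and estimates how many hours remain before the credits run out. The function name and the sample values are hypothetical; in practice the datapoints would come from CloudWatch.

```python
from typing import Optional, Sequence

def hours_until_exhausted(balances: Sequence[float], interval_hours: float) -> Optional[float]:
    """Estimate hours until BurstCreditBalance reaches zero.

    balances: credit balance samples in bytes, oldest first, one every interval_hours.
    Returns None when the balance is flat or growing (clients are not saturating).
    """
    if len(balances) < 2:
        raise ValueError("need at least two samples")
    elapsed = interval_hours * (len(balances) - 1)
    # Average drain rate over the window, in bytes per hour; positive means draining.
    drain_per_hour = (balances[0] - balances[-1]) / elapsed
    if drain_per_hour <= 0:
        return None
    return balances[-1] / drain_per_hour
```

A balance that keeps falling means the clients are consuming throughput faster than the baseline refills credits.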

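EBS: DAS (block storage) / EFS: NAS (NFS)

EBS volume types: gp2/gp3 (General Purpose SSD), io1/io2 (Provisioned IOPS SSD), st1 (Throughput Optimized HDD), sc1 (Cold HDD)

EFS performance modes: General Purpose (GP), Max I/O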

Auto Scaling

The services covered by Unified Auto Scaling are EC2, Spot Fleets, DynamoDB, Aurora Read Replicas, and ECS on Fargate.

S3 and TLS

S3 buckets only allow the default AWS certificate for serving objects via TLS; to serve a bucket over HTTPS with your own certificate, put CloudFront in front of it.

In order to maintain 11 9s durability, how many times does S3 replicate each object within the storage class scope? 3 times.

S3 One Zone-IA (One Zone-Infrequent Access) is designed for 99.5% availability (2.5 9s), the lowest of all S3 storage classes; its SLA commitment is 99%.

S3 Standard-IA (Standard-Infrequent Access) is designed for 99.9% (3 9s) availability and 99.999999999% (11 9s) durability.
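Availability percentages are easier to compare as downtime. This minimal sketch converts a percentage into the maximum minutes per year it allows, assuming a 365-day year; the helper name is hypothetical.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600; ignores leap years

def annual_downtime_minutes(availability_percent: float) -> float:
    """Maximum minutes of unavailability per year allowed by an availability figure."""
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability must be a percentage between 0 and 100")
    return (1 - availability_percent / 100) * MINUTES_PER_YEAR

# Designed availability of a few S3 storage classes.
for label, pct in [("One Zone-IA", 99.5), ("Standard-IA", 99.9), ("Standard", 99.99)]:
    hours = annual_downtime_minutes(pct) / 60
    print(f"{label}: {pct}% -> about {hours:.1f} hours/year")
```

The gap is large: 99.5% allows roughly 43.8 hours a year, 99.9% about 8.8 hours, and 99.99% under one hour.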

S3: enabling cross-region replication (CRR) does not copy existing objects by default, so it is up to the customer to replicate them separately (for example with S3 Batch Replication).
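The behaviour can be modelled with a toy bucket: replication only applies to writes made after it is enabled, and older objects need an explicit backfill. `ToyBucket` and its methods are hypothetical and are not the S3 API.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

@dataclass
class ToyBucket:
    """Hypothetical in-memory model of a bucket, not the S3 API."""
    name: str
    objects: Dict[str, bytes] = field(default_factory=dict)
    replica_target: Optional["ToyBucket"] = None

    def enable_replication(self, target: "ToyBucket") -> None:
        # Like CRR: only affects objects written from now on.
        self.replica_target = target

    def put(self, key: str, body: bytes) -> None:
        self.objects[key] = body
        if self.replica_target is not None:
            self.replica_target.objects[key] = body

    def backfill(self) -> int:
        """Copy pre-existing objects yourself (the step CRR leaves to the customer)."""
        if self.replica_target is None:
            raise RuntimeError("replication not configured")
        missing = [k for k in self.objects if k not in self.replica_target.objects]
        for key in missing:
            self.replica_target.objects[key] = self.objects[key]
        return len(missing)
```

An object written before `enable_replication` only reaches the destination after `backfill` runs.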

SCP: service control policy

An Organizations SCP is a policy document that specifies the maximum permissions available to member accounts in the affected part of the organization (the root, an OU, or a single account). An SCP grants nothing by itself; it only sets a ceiling.
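A simplified sketch of the "ceiling" idea: an action is allowed only if no policy explicitly denies it, the SCP allows it, and an identity policy actually grants it. This deliberately ignores resources, conditions, permission boundaries and resource-based policies; the function names are hypothetical.

```python
from fnmatch import fnmatchcase
from typing import Iterable

def _matches(action: str, patterns: Iterable[str]) -> bool:
    # IAM action names are case-insensitive and support * and ? wildcards.
    return any(fnmatchcase(action.lower(), p.lower()) for p in patterns)

def is_allowed(action: str, scp_allow: Iterable[str], scp_deny: Iterable[str],
               identity_allow: Iterable[str], identity_deny: Iterable[str] = ()) -> bool:
    # 1. An explicit Deny in any policy always wins.
    if _matches(action, scp_deny) or _matches(action, identity_deny):
        return False
    # 2. The SCP is a ceiling: the action must fall inside it...
    if not _matches(action, scp_allow):
        return False
    # 3. ...and an identity policy must actually grant it (SCPs grant nothing).
    return _matches(action, identity_allow)
```

Even a user whose identity policy allows `*` cannot perform an action the SCP leaves out.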

Glacier has automatic server-side encryption enabled on all objects. The customer gets a choice of keys that can be used but cannot choose whether encryption is enabled or not.

VPC gateway and interface endpoints let traffic reach the service API endpoint without traversing the internet, an internet gateway, or a NAT device; the traffic stays on the AWS network.

AWS organizations support both accounts created as part of an organization and invited accounts that were previously standalone.

Internet gateway: free

An Internet gateway is a fault-tolerant virtualized resource with no visibility from the customer perspective.

VPC peering connection: no hourly charge; you pay only for data transferred over it (for example across AZs or regions).

The VPC peering connection is documented as having no single point of failure and being a redundant resource, which means it is fault tolerant.

The VPG (virtual private gateway) resource is hardware backed in two data centers within the region to which the VPC is deployed. It uses active/passive tunnels in a highly available mode but is not fault tolerant.

The VPC network is a region-scoped object, and many of the resources attached to it are scoped at the same level, while others (such as subnets) are scoped at the AZ level.

VPC NACL: stateless firewall at the subnet level that supports explicit deny rules (blacklisting).
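NACL rules are evaluated in order, lowest rule number first; the first matching rule decides, and anything unmatched hits the final `*` deny rule. A minimal sketch of that evaluation for inbound traffic, ignoring protocol; `NaclRule` and `evaluate_inbound` are hypothetical names.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import List

@dataclass(frozen=True)
class NaclRule:
    number: int      # evaluated lowest first
    cidr: str        # source CIDR for an inbound rule
    port_from: int
    port_to: int
    action: str      # "allow" or "deny"

def evaluate_inbound(rules: List[NaclRule], src_ip: str, port: int) -> str:
    """Return 'allow' or 'deny' for one inbound packet (protocol ignored for brevity)."""
    for rule in sorted(rules, key=lambda r: r.number):
        if ip_address(src_ip) in ip_network(rule.cidr) and rule.port_from <= port <= rule.port_to:
            return rule.action  # first match wins; later rules are not consulted
    return "deny"  # the implicit '*' rule denies everything else
```

Because NACLs are stateless, return traffic needs its own outbound rule, unlike a security group.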

VPC peering connections can cross regions (and even accounts). The transit gateway allows cross-region communication, but only through an inter-region peering attachment directly between two transit gateways.

VPC peering connections in the same region do not use encryption, but the connections are labeled as private. Cross-region connections use encryption and also use the AWS private network.

While the VPC gateway endpoint is free, it can only be used to reach S3 and DynamoDB resources in the same region as the VPC.

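Each dedicated Direct Connect connection is provisioned at a fixed port speed: 1 Gbps or 10 Gbps (100 Gbps is also available at some locations).

Speeds below 1 Gbps are offered as hosted connections by AWS Direct Connect Partners (resellers); they are not natively part of the service.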

Elastic Load Balancer, even if it is taking no traffic, is still charged by the hour.

Elastic Load Balancer scales out by adding discrete resources, which introduces short delays.

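The Elastic IP used to be free as long as it was attached to a running EC2 instance, with an hourly charge if the instance was stopped or the Elastic IP detached. Since February 2024, AWS charges hourly for every public IPv4 address, including Elastic IPs attached to running instances.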

EFS: the service charges for storage actually used rather than for a provisioned volume size.

EFS: General Purpose or Max I/O performance mode.

While it is only achievable in some regions, the maximum throughput for EFS is 3 GB/s.

EFS: encryption at rest must be enabled when the file system is created; it cannot be turned on afterward.

For an EFS file system, what is the maximum recommended number of concurrent transfers per client? AWS recommends up to 40 concurrent transfers per client, with a hard limit of 250 MB/s per client overall.
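Parallelism is what lets a single client approach its throughput limit. A minimal sketch that copies files into a directory (for example an EFS mount point such as a hypothetical `/mnt/efs`) with a bounded thread pool; the 40-worker default just mirrors the recommendation above, and `parallel_copy` is a hypothetical helper.

```python
import shutil
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path
from typing import List

MAX_CONCURRENT_TRANSFERS = 40  # per-client recommendation quoted in the note

def parallel_copy(sources: List[Path], dest_dir: Path,
                  workers: int = MAX_CONCURRENT_TRANSFERS) -> List[Path]:
    """Copy files into dest_dir with at most `workers` transfers in flight."""
    dest_dir.mkdir(parents=True, exist_ok=True)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # shutil.copy2 returns the destination path; map preserves input order.
        return list(pool.map(lambda src: Path(shutil.copy2(src, dest_dir / src.name)), sources))
```

Threads suit this because each copy spends most of its time waiting on NFS I/O.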

EBS standard (magnetic, previous-generation) volumes are designed for cheap, slow storage of up to 1 TB per volume, but you are also charged per I/O request, which can be a significant cost.

EBS volumes are an AZ-scoped resource. Any writes are synchronously written to two different storage units in different data centers.

EFA (Elastic Fabric Adapter): network interface for maximum network performance, aimed at HPC and machine learning workloads.

On-demand instances are not bound by any contract or agreement and can be modified at any time, including being terminated, without any penalty.

For latency-sensitive operations, you can expect roughly 100 to 200 ms of latency for small-object S3 requests.

Spot instances are well suited to short, fault-tolerant workloads that require a large amount of compute, since they can be interrupted with a two-minute warning.
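The two-minute warning is exposed through instance metadata at `/latest/meta-data/spot/instance-action`, which returns 404 when no interruption is scheduled and otherwise a JSON body with `action` and `time`. This sketch only parses that body and computes the time left; fetching it (ideally with an IMDSv2 token) is left out, and the function name is hypothetical.

```python
import json
from datetime import datetime, timezone
from typing import Optional

def seconds_until_interruption(notice_body: Optional[str], now: datetime) -> Optional[float]:
    """Parse the spot/instance-action metadata body.

    notice_body is None when the metadata item returned 404 (no interruption scheduled).
    """
    if notice_body is None:
        return None
    notice = json.loads(notice_body)
    # "time" is a UTC timestamp such as "2017-09-18T08:22:00Z".
    when = datetime.strptime(notice["time"], "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)
    return (when - now).total_seconds()
```

A worker polling this every few seconds can checkpoint its state as soon as a value comes back.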

Spread and partition placement groups can span multiple AZs within a region; cluster placement groups are limited to a single AZ.

Which pillar of the Well-Architected Framework includes the principle “Measure overall efficiency”? cost optimization

Serverless architectures use resources only when absolutely necessary, thus exhibiting a high degree of performance efficiency.

The SNS-to-SQS architecture is described as fan-out because the distribution of messages from one topic to multiple queues physically looks like a fan.
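The fan-out idea in miniature: one publish, one copy per subscribed queue. `ToyTopic` is a hypothetical in-memory stand-in for an SNS topic, and Python's `queue.Queue` stands in for SQS queues; this is not the AWS SDK.

```python
from queue import Queue
from typing import Dict

class ToyTopic:
    """Hypothetical in-memory stand-in for an SNS topic; not the AWS SDK."""

    def __init__(self) -> None:
        self.subscriptions: Dict[str, Queue] = {}

    def subscribe(self, name: str, queue: Queue) -> None:
        self.subscriptions[name] = queue

    def publish(self, message: str) -> int:
        # Fan-out: every subscribed queue receives its own copy of the message.
        for queue in self.subscriptions.values():
            queue.put(message)
        return len(self.subscriptions)
```

Each consumer then drains its own queue at its own pace, which is what decouples the services.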

When using Lambda functions as part of a decoupled architecture, which two pillars of the Well-Architected Framework are addressed by the concurrency setting on each function?

= Performance efficiency and cost optimization
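A concurrency limit caps how many executions of a function run at once, which both protects downstream resources and bounds spend. This deterministic sketch counts how many invocations would be rejected under a given limit; it is a simplified model (no retries, no burst behaviour) and `count_throttles` is a hypothetical name.

```python
from typing import List, Tuple

def count_throttles(invocations: List[Tuple[float, float]], concurrency_limit: int) -> int:
    """Count invocations rejected because the concurrency limit was reached.

    invocations: (start_time, duration) pairs in seconds, any order.
    An invocation is accepted if fewer than concurrency_limit accepted
    invocations are still running when it starts.
    """
    running_until: List[float] = []  # end times of accepted, in-flight invocations
    throttled = 0
    for start, duration in sorted(invocations):
        # Drop executions that have finished by this start time.
        running_until = [end for end in running_until if end > start]
        if len(running_until) < concurrency_limit:
            running_until.append(start + duration)
        else:
            throttled += 1
    return throttled
```

Five simultaneous one-second requests against a limit of 2 leave three throttled; spreading the same requests out in time leaves none.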

When you launch a new EC2 instance, the EC2 service attempts to place the instance in such a way that all of your instances are spread out across underlying hardware to minimize correlated failures. You can use placement groups to influence the placement of a group of interdependent instances to meet the needs of your workload. Depending on the type of workload, you can create a placement group using one of the following placement strategies:

- Cluster – packs instances close together inside an Availability Zone. This strategy enables workloads to achieve the low-latency network performance necessary for tightly-coupled node-to-node communication that is typical of HPC applications.
- Partition – spreads your instances across logical partitions such that groups of instances in one partition do not share the underlying hardware with groups of instances in different partitions. This strategy is typically used by large distributed and replicated workloads, such as Hadoop, Cassandra, and Kafka.
- Spread – strictly places a small group of instances across distinct underlying hardware to reduce correlated failures.
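For the partition strategy, the point is to keep the replicas of one piece of data in different partitions, so a rack failure in one partition costs at most one replica. A minimal round-robin sketch, assuming partitions numbered from 1 and the EC2 limit of seven partitions per AZ; `assign_replicas` and the shard names are hypothetical.

```python
from typing import Dict, List

MAX_PARTITIONS_PER_AZ = 7  # EC2 limit for partition placement groups

def assign_replicas(shards: List[str], replication_factor: int,
                    partition_count: int) -> Dict[str, List[int]]:
    """Place each shard's replicas in distinct partitions (numbered from 1)."""
    if not 1 <= partition_count <= MAX_PARTITIONS_PER_AZ:
        raise ValueError("a partition placement group allows 1 to 7 partitions per AZ")
    if replication_factor > partition_count:
        raise ValueError("need at least as many partitions as replicas")
    placement = {}
    for i, shard in enumerate(shards):
        # Rotate the starting partition so load is spread across partitions.
        placement[shard] = [((i + r) % partition_count) + 1 for r in range(replication_factor)]
    return placement
```

Kafka and Cassandra can read the partition number from instance metadata to make this kind of rack-aware decision themselves.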

Route 53 has an uptime SLA of 100%

AWS recommends leaving Predictive Auto Scaling in forecast-only mode for at least 48 hours, but each application is unique, and some applications might require longer.

General Purpose performance mode file systems have the lowest metadata latency and perform best for jobs like a UNIX find command.

When designing a DynamoDB table, which of the following is an advantage of deploying a global table? Lower latency of table access

When deploying multiple masters for a relational database, it is possible to direct a higher number of write queries to the underlying database because the compute and storage tiers are separate infrastructures.

DB SLA : Redshift SLA is 3 9s, RDS with Multi-AZ is 3.5 9s, and an EC2 instance is just 1 9. Aurora Multi-Master has an availability SLA of 4 9s.

DynamoDB allows for static provisioning of read and write ops to force an upper boundary on performance.
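Static provisioning is expressed in capacity units: 1 RCU covers one strongly consistent read per second (or two eventually consistent reads) of an item up to 4 KB, and 1 WCU covers one standard write per second of up to 1 KB. A minimal calculator for those rules, ignoring transactional requests; the function names are hypothetical.

```python
import math

def read_capacity_units(item_kb: float, reads_per_sec: int, strongly_consistent: bool = True) -> int:
    # Each read consumes one unit per 4 KB block, rounded up.
    units_per_read = math.ceil(item_kb / 4)
    total = units_per_read * reads_per_sec
    # Eventually consistent reads cost half as much.
    return total if strongly_consistent else math.ceil(total / 2)

def write_capacity_units(item_kb: float, writes_per_sec: int) -> int:
    # Each write consumes one unit per 1 KB block, rounded up.
    return math.ceil(item_kb) * writes_per_sec
```

For example, 10 strongly consistent reads per second of a 6 KB item need 20 RCUs (each read rounds up to two 4 KB blocks).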

Because the data is replicated into each region configured for the global table, the data exhibits increased durability and resilience.

In the Auto Scaling service, only launch templates support a mixture of on-demand and spot instances at the same time.

CloudFront is a CDN that is designed to improve the overall performance of a web application and as a result, the user experience by lowering latency and increasing throughput to access web assets.

KMS (Key Management Service): Region-scoped

SSO: the amazon.com domain does not support SSO

General security (SCPs, service control policies): pick the answers built around DENY statements.

General security: any user with root credentials has full, unimpeded access to all AWS resources in the account (in an Organizations member account, SCPs still restrict the root user).

Database encryption: configured per instance.

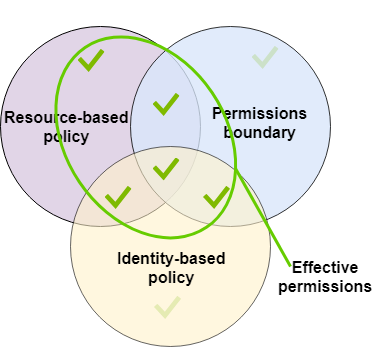

Permission boundaries (attached to a user or a role) set the maximum permissions that identity-based policies can grant, giving finer control over access to a type of resource.

sc1, or cold-storage HDD, is designed for infrequent access at a low price and supports up to 16TB per volume.

IAM roles can be used for granting temporary permissions within the same account, between accounts, and between services but cannot be used to grant anonymous access, as an IAM role requires a principal ID.

The Customer master keys (CMK) cannot be used without a key policy in place, with statements for both key administrators and key users.
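A sketch of a key policy with the two kinds of statements, generated as JSON. The action lists follow the pattern of the console's default policy for administrators and users; the account ID (`111122223333`) and role names are placeholders, and `build_key_policy` is a hypothetical helper.

```python
import json

def build_key_policy(account_id: str, admin_role: str, user_role: str) -> str:
    """Return a KMS key policy JSON document with admin and user statements."""
    admin_arn = f"arn:aws:iam::{account_id}:role/{admin_role}"
    user_arn = f"arn:aws:iam::{account_id}:role/{user_role}"
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {   # Key administrators: manage the key, but no cryptographic operations.
                "Sid": "AllowKeyAdministration",
                "Effect": "Allow",
                "Principal": {"AWS": admin_arn},
                "Action": ["kms:Create*", "kms:Describe*", "kms:Enable*", "kms:List*",
                           "kms:Put*", "kms:Update*", "kms:Revoke*", "kms:Disable*",
                           "kms:Get*", "kms:Delete*", "kms:TagResource", "kms:UntagResource",
                           "kms:ScheduleKeyDeletion", "kms:CancelKeyDeletion"],
                "Resource": "*",  # in a key policy, "*" means this key
            },
            {   # Key users: cryptographic operations only.
                "Sid": "AllowKeyUse",
                "Effect": "Allow",
                "Principal": {"AWS": user_arn},
                "Action": ["kms:Encrypt", "kms:Decrypt", "kms:ReEncrypt*",
                           "kms:GenerateDataKey*", "kms:DescribeKey"],
                "Resource": "*",
            },
        ],
    }
    return json.dumps(policy, indent=2)
```

The console's default policy also includes a statement giving the account root principal `kms:*`, which lets IAM policies delegate access; leaving it out means only the principals named here can manage the key.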

S3 encryption

Server-Side Encryption with Amazon S3-Managed Keys (SSE-S3)

When you use Server-Side Encryption with Amazon S3-Managed Keys (SSE-S3), each object is encrypted with a unique key. As an additional safeguard, it encrypts the key itself with a root key that it regularly rotates. Amazon S3 server-side encryption uses one of the strongest block ciphers available, 256-bit Advanced Encryption Standard (AES-256), to encrypt your data. For more information, see Protecting data using server-side encryption with Amazon S3-managed encryption keys (SSE-S3).

Server-Side Encryption with KMS keys Stored in AWS Key Management Service (SSE-KMS)

Server-Side Encryption with AWS KMS keys (SSE-KMS) is similar to SSE-S3, but with some additional benefits and charges for using this service. There are separate permissions for the use of a KMS key that provides added protection against unauthorized access of your objects in Amazon S3. SSE-KMS also provides you with an audit trail that shows when your KMS key was used and by whom. Additionally, you can create and manage customer managed keys or use AWS managed keys that are unique to you, your service, and your Region. For more information, see Protecting Data Using Server-Side Encryption with KMS keys Stored in AWS Key Management Service (SSE-KMS).

Server-Side Encryption with Customer-Provided Keys (SSE-C)

With Server-Side Encryption with Customer-Provided Keys (SSE-C), you manage the encryption keys and Amazon S3 manages the encryption, as it writes to disks, and decryption, when you access your objects. For more information, see Protecting data using server-side encryption with customer-provided encryption keys (SSE-C).
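The three options differ mainly in what the client sends with each upload. This sketch builds the extra keyword arguments for boto3's `put_object` (`ServerSideEncryption`, `SSEKMSKeyId`, `SSECustomerAlgorithm`, `SSECustomerKey`) without calling AWS; `sse_put_kwargs` itself is a hypothetical helper.

```python
from typing import Any, Dict, Optional

def sse_put_kwargs(mode: str, kms_key_id: Optional[str] = None,
                   customer_key: Optional[bytes] = None) -> Dict[str, Any]:
    """Extra keyword arguments for boto3's S3 put_object for each SSE mode."""
    if mode == "SSE-S3":
        return {"ServerSideEncryption": "AES256"}
    if mode == "SSE-KMS":
        kwargs: Dict[str, Any] = {"ServerSideEncryption": "aws:kms"}
        if kms_key_id:  # when omitted, S3 typically uses the AWS managed key aws/s3
            kwargs["SSEKMSKeyId"] = kms_key_id
        return kwargs
    if mode == "SSE-C":
        if customer_key is None or len(customer_key) != 32:
            raise ValueError("SSE-C needs a 256-bit (32-byte) key that you store yourself")
        # The same key must be supplied again on every GET of this object.
        return {"SSECustomerAlgorithm": "AES256", "SSECustomerKey": customer_key}
    raise ValueError(f"unknown mode: {mode}")
```

Assuming boto3 is installed and credentials are configured, a call would look like `s3.put_object(Bucket="my-bucket", Key="report.csv", Body=data, **sse_put_kwargs("SSE-KMS"))`, with the bucket and key names as placeholders. For SSE-C, boto3 computes the key's MD5 header itself.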

Performance penalty with encryption: Redshift (at the hardware level)

Traffic mirroring works at the network interface (ENI) level.

Dedicated instances = no elasticity
